AI Generating Real-Time Emotional Soundtracks for Digital Experiences

The fusion of artificial intelligence and music has given rise to a transformative trend: AI that generates emotional soundtracks in real time for digital experiences. Instead of a fixed score, the music adapts to the listener's emotions and context, which changes how we interact with media and offers a richer sensory experience. This post explores the emerging field of emotionally responsive soundscapes, their applications, and their future potential.

—

The Rise of AI in Music Composition

In recent years, AI has made significant strides in the music industry, pushing boundaries in composition, production, and performance. From algorithms that create symphonies in the style of the great masters to AI DJs curating live event playlists, technology is reshaping our auditory landscape. One of the most captivating developments is the ability to craft soundtracks that dynamically respond to the real-time emotional states of users.

—

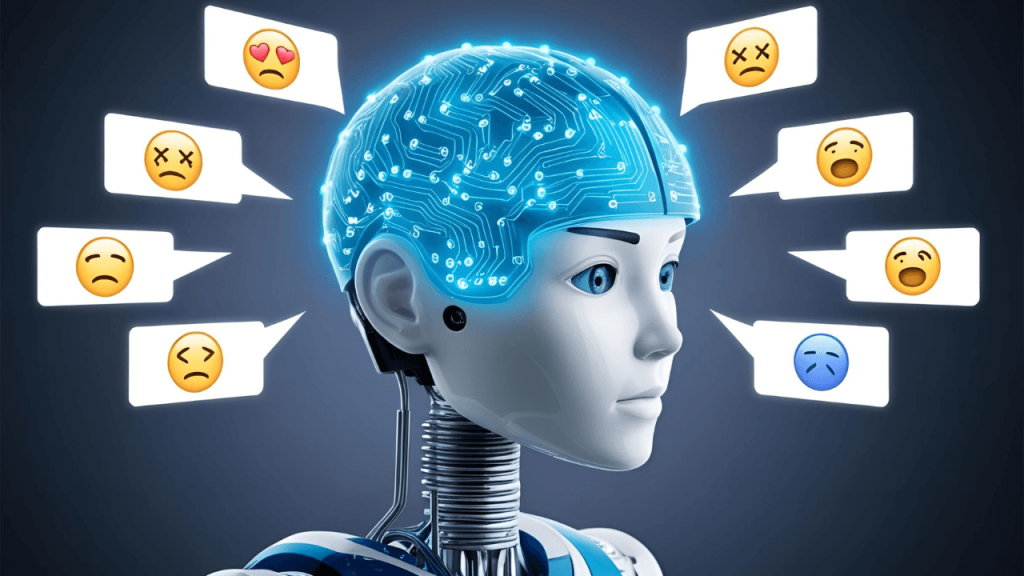

Understanding Emotional Soundscapes

An emotional soundtrack is a carefully curated sequence of music or sounds that evokes or complements specific feelings. Traditional compositions rely heavily on a composer's skill to elicit the desired emotional response. AI-driven applications take this concept further by analyzing data in real time to produce soundscapes that match the user's current emotional state or the atmosphere of a digital environment.

—

How AI Creates Real-Time Emotional Soundtracks

The process begins with emotion-sensing technology, which might involve biometric sensors such as heart rate monitors, facial expression analysis, or voice analysis. This data is fed into algorithms trained to recognize emotional cues. Based on these cues, the system generates a soundtrack designed either to enhance or to counteract the detected emotions, providing a seamless auditory experience.
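To make the first step concrete, here is a minimal Python sketch of how a single heart-rate reading might be turned into an arousal score (how calm or excited the user is, from 0 to 1) and then into a target for the music under an "enhance" or "counteract" policy. The linear heart-rate heuristic, the `BiometricSample` fields, and the `estimate_arousal` and `target_arousal` functions are hypothetical illustrations, not a validated emotion model; a real system would rely on trained classifiers and usually several signals at once.

```python
from dataclasses import dataclass


@dataclass
class BiometricSample:
    # Hypothetical fields; the user's resting and maximum heart rates are
    # assumed to be known from calibration.
    heart_rate_bpm: float
    resting_hr_bpm: float
    max_hr_bpm: float


def estimate_arousal(sample: BiometricSample) -> float:
    """Map heart rate linearly onto an arousal score in [0, 1] (toy heuristic)."""
    span = sample.max_hr_bpm - sample.resting_hr_bpm
    if span <= 0:
        raise ValueError("max_hr_bpm must exceed resting_hr_bpm")
    score = (sample.heart_rate_bpm - sample.resting_hr_bpm) / span
    # Clamp readings outside the calibrated range.
    return min(1.0, max(0.0, score))


def target_arousal(detected: float, mode: str) -> float:
    """Choose the arousal level the soundtrack should aim for.

    "enhance" mirrors the detected state; "counteract" inverts it, so a tense
    listener gets calm music and a sluggish one gets energetic music.
    """
    if mode == "enhance":
        return detected
    if mode == "counteract":
        return 1.0 - detected
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    reading = BiometricSample(heart_rate_bpm=120, resting_hr_bpm=60, max_hr_bpm=180)
    arousal = estimate_arousal(reading)
    print(arousal, target_arousal(arousal, "counteract"))
```

Simply inverting arousal is the bluntest possible "counteract" policy; a therapy or productivity app might instead steer gradually toward a fixed calm or focused target.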

Machine learning and deep learning models play a central role. Trained on large datasets that pair musical compositions with listeners' emotional responses, these models learn how musical elements such as tempo, key, and rhythm affect people psychologically, which lets them create or select music that fits the desired emotional outcome.
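The kind of relationship such a model learns can be illustrated with a hand-written stand-in. The Python sketch below maps a point in a valence/arousal space (how pleasant and how energetic a feeling is) to a tempo, a major or minor mode, and a rough rhythmic density, following the widely observed tendencies that faster music reads as more energetic and major keys as more positive. The specific ranges (60 to 160 BPM, 1 to 4 notes per beat) and the `params_for_emotion` function are hypothetical choices, not output from a trained model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MusicParams:
    tempo_bpm: int
    mode: str            # "major" or "minor"
    notes_per_beat: int  # rough rhythmic density


def params_for_emotion(valence: float, arousal: float) -> MusicParams:
    """Hand-written mapping standing in for a learned model.

    valence: -1.0 (negative) to 1.0 (positive)
    arousal:  0.0 (calm)     to 1.0 (excited)
    """
    if not (-1.0 <= valence <= 1.0 and 0.0 <= arousal <= 1.0):
        raise ValueError("valence must be in [-1, 1] and arousal in [0, 1]")
    tempo = round(60 + arousal * 100)          # 60 BPM when calm, 160 BPM when excited
    mode = "major" if valence >= 0 else "minor"
    density = 1 + round(arousal * 3)           # 1 to 4 notes per beat
    return MusicParams(tempo, mode, density)


if __name__ == "__main__":
    print(params_for_emotion(valence=0.2, arousal=1.0))   # upbeat
    print(params_for_emotion(valence=-0.3, arousal=0.0))  # subdued
```

Keeping the mapping behind one function means a trained model could later replace the hand-written rules without changing the code that renders the music.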

—

Applications in Digital Lifestyle

1. **Virtual Reality and Gaming:**

In the world of VR and gaming, real-time emotional soundtracks heighten the immersive experience. Imagine playing a game where the background score intensifies as your in-game character faces danger or softens during moments of reflection, all driven by your physiological responses and gameplay progression.

2. **Remote Work and Productivity:**

AI-generated music could also benefit remote work. By monitoring a user's stress or concentration levels through wearable devices, an AI system can create soundtracks that encourage focus or provide relaxation, supporting both productivity and mental well-being.

3. **Healthcare and Therapy:**

In therapeutic settings, these technologies can help create calming environments for patients with anxiety or PTSD by adapting the music to each patient's needs as a session unfolds, which could make sessions more effective.

4. **Personalized Content Consumption:**

Streaming platforms can harness AI to offer users soundtracks that match their current mood or enhance the emotional pull of a narrative, be it a movie, podcast, or even an e-book.
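Several of these applications (the danger-driven game score, or focus music that follows stress readings) rely on the same mechanism: a continuous intensity value that blends context signals with physiological ones and changes smoothly instead of jumping. The Python sketch below shows one way to do that, with an exponential moving average driving a layered score in which extra instrument stems fade in as intensity rises. The `AdaptiveScore` class, the 70/30 weighting between gameplay threat and player arousal, the smoothing factor, and the stem names are all hypothetical choices for illustration, not taken from any particular game engine or audio middleware.

```python
from __future__ import annotations


class AdaptiveScore:
    """Smoothed intensity driver for a layered soundtrack (hypothetical design)."""

    LAYERS = ("ambient", "percussion", "brass")  # hypothetical stem names

    def __init__(self, threat_weight: float = 0.7, smoothing: float = 0.2):
        # threat_weight: share of intensity that comes from gameplay rather
        # than from the player's measured arousal.
        # smoothing: moving-average factor in (0, 1]; smaller values react more slowly.
        if not (0.0 <= threat_weight <= 1.0 and 0.0 < smoothing <= 1.0):
            raise ValueError("threat_weight must be in [0, 1], smoothing in (0, 1]")
        self.threat_weight = threat_weight
        self.smoothing = smoothing
        self.intensity = 0.0

    def update(self, threat: float, arousal: float) -> dict[str, float]:
        """Advance one tick; threat and arousal are expected in [0, 1]."""
        raw = self.threat_weight * threat + (1.0 - self.threat_weight) * arousal
        # Exponential moving average: move part of the way toward the new value.
        self.intensity += self.smoothing * (raw - self.intensity)
        return self.layer_gains()

    def layer_gains(self) -> dict[str, float]:
        """Linear gain (0 to 1) for each stem at the current intensity."""
        extra = self.LAYERS[1:]
        n = len(extra)
        gains = {self.LAYERS[0]: 1.0}  # the ambient bed always plays
        for i, name in enumerate(extra):
            # Each extra stem fades in over its own slice of the intensity range:
            # percussion over 0 to 0.5, brass over 0.5 to 1.0.
            gains[name] = min(1.0, max(0.0, self.intensity * n - i))
        return gains


if __name__ == "__main__":
    score = AdaptiveScore()
    for tick in range(5):
        # The character is suddenly in danger; the music swells over several ticks.
        print(tick, score.update(threat=1.0, arousal=0.8))
```

The smoothing is what keeps the score musical: without it, a brief spike in heart rate would make the brass enter and drop out abruptly. Lowering `smoothing` trades responsiveness for stability.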

—

Challenges and Ethical Considerations

The introduction of AI-generated emotional soundtracks poses several challenges. Privacy is a significant concern, as the system requires access to personal emotional data. Ensuring data protection and transparency about how this data is used is critical. Additionally, there is the artistic question of authenticity. Will AI compositions lack the emotive human touch that defines so much of our connection to music?

Moreover, the potential for AI to manipulate emotions raises ethical concerns. Developers must ensure that this technology is used responsibly, promoting user well-being rather than exploitation.

—

The Future of AI in Emotional Soundscapes

The outlook for AI-generated emotional soundtracks is promising, and ongoing research is likely to address many current limitations. Future developments may include more accurate emotion detection, finer-grained personalization, and integration into a broader range of applications, from wellness apps to educational tools.

As AI evolves, so will its capability to create more nuanced and effective soundscapes, continually pushing the envelope of how we experience digital media. Collaborations between musicians, tech developers, and psychologists are likely to drive this evolution, ensuring that these soundscapes are not only technologically advanced but also emotionally intelligent and artistically fulfilling.

—

Conclusion

AI-driven real-time emotional soundtracks represent a significant leap forward in the personalization of digital experiences. By merging cutting-edge technology with the timeless power of music, we are opening new avenues for how we engage with digital environments. As with all technological innovations, it’s essential to navigate these developments with care, balancing technological possibilities with ethical responsibilities.

The potential to create individually tailored auditory experiences that adapt to our emotions in real time is not just a technological marvel but a journey into understanding the symbiotic relationship between human emotion and sound. This progression promises to redefine how we perceive music in our daily digital lives, offering a new dimension of interactive and immersive experiences.